Hello Incognito,

I’d like to report some odd behavior with my vNodes, as shown on the Incognito Node Monitor website: https://monitor.incognito.org/node-monitor

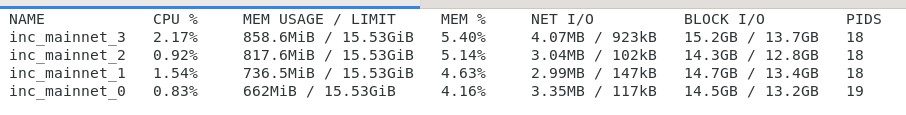

Below is a screenshot of the Docker container “Stats” on my vNode machine, which runs 4 nodes:

But when I run the command `sudo docker ps`:

What worries me is that the status shows “Up 25 mins”, which means the containers were (re)started only 25 minutes earlier. I’m not a very technical person, but this tells me something caused my nodes to go offline.

If that’s correct, how can I troubleshoot the cause?
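A minimal sketch of checks that could narrow this down. It assumes a standard Docker install on Ubuntu with systemd and `sudo` access. The function names are hypothetical helpers, not part of any Incognito tooling, and the container name passed to `vnode_logs` is a placeholder taken from `docker ps`:

```shell
#!/usr/bin/env bash
# Sketch only: helpers to see when (and possibly why) the node containers restarted.
# Assumes Docker is managed by systemd on Ubuntu and sudo is available.

# Print when the host last booted. If this matches the "Up ..." time,
# the whole machine restarted, not just the containers.
host_boot_time() {
  uptime -s
}

# For every container (running or stopped), print its name, start time,
# Docker's restart counter (restarts done by the restart policy),
# the last exit code, and whether the last exit was an out-of-memory kill.
vnode_status() {
  sudo docker ps -aq | while read -r id; do
    sudo docker inspect --format \
      '{{.Name}} started={{.State.StartedAt}} restarts={{.RestartCount}} exit={{.State.ExitCode}} oom={{.State.OOMKilled}}' \
      "$id"
  done
}

# Show up to the last 100 log lines from the past hour for one container.
# $1: a container name or ID copied from `sudo docker ps` (placeholder).
vnode_logs() {
  sudo docker logs --since 1h --tail 100 "$1"
}

# Show messages from the Docker daemon itself over the last day
# (daemon restarts, errors), which can explain all containers restarting together.
docker_daemon_log() {
  sudo journalctl -u docker --since "24 hours ago" --no-pager
}
```

For example, save this as `vnode-check.sh` (a hypothetical name), run `source vnode-check.sh`, then call `host_boot_time`, `vnode_status`, and `vnode_logs <container>`. Comparing the boot time with each container’s `started=` value shows whether the whole PC restarted or only the containers did.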

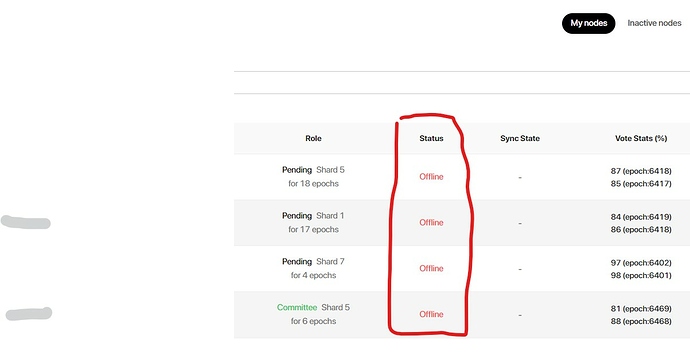

On the Node Monitor website, my 4 nodes appear as offline:

This issue of my nodes going online and offline has been happening for several weeks now.

I have not made any changes to my PC (and have not installed any other software), although I did apply the latest updates to Ubuntu 20.04.4 LTS and restarted my node yesterday.

Does anyone have any ideas about what might be going wrong with my vNodes, which go online and offline frequently (a few times a day) according to the Incognito Node Monitor?

Thanks for any help provided.