Important

I’m not maintaining the code anymore. If you are looking for a hassle-free way to host your nodes, consider my hosting service: no private keys required and monthly payments based on earnings.

Hosting service for vNodes. Website fully automated

Also: don’t update to versions beyond 2.0. Those are not intended for the public, only for my hosting service.

I have created a Node script that automatically creates hard links for every .ldb file you may have duplicated in your /home/incognito directory.
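The post doesn't say how duplicates are detected, so what follows is only a minimal TypeScript sketch of the general technique, not the repository's code. It walks a directory such as `/home/incognito`, hashes every `.ldb` file with SHA-256, and replaces each later copy with a hard link to the first one. The function names are hypothetical. It assumes each `.ldb` file is small enough to read into memory at once, and that all copies sit on the same filesystem, since hard links cannot cross filesystems.

```typescript
import * as fs from "node:fs";
import * as path from "node:path";
import { createHash } from "node:crypto";

// Recursively collect every regular file ending in ".ldb" under `root`.
function findLdbFiles(root: string): string[] {
  const found: string[] = [];
  for (const entry of fs.readdirSync(root, { withFileTypes: true })) {
    const full = path.join(root, entry.name);
    if (entry.isDirectory()) found.push(...findLdbFiles(full));
    else if (entry.isFile() && entry.name.endsWith(".ldb")) found.push(full);
  }
  return found;
}

// Assumption: two files with the same SHA-256 digest have identical contents.
function hashFile(file: string): string {
  return createHash("sha256").update(fs.readFileSync(file)).digest("hex");
}

// Replace each duplicate .ldb file with a hard link to the first copy seen.
// Returns the total size of the duplicates that were replaced.
function hardLinkDuplicates(root: string): number {
  const firstByHash = new Map<string, string>();
  let replacedBytes = 0;
  for (const file of findLdbFiles(root)) {
    const digest = hashFile(file);
    const original = firstByHash.get(digest);
    if (original === undefined) {
      firstByHash.set(digest, file);
      continue;
    }
    const a = fs.statSync(original);
    const b = fs.statSync(file);
    if (a.dev === b.dev && a.ino === b.ino) continue; // already hard-linked
    // Create the link under a temporary name, then rename it over the
    // duplicate, so `file` always exists even if a step fails.
    const tmp = `${file}.tmp-link`;
    fs.rmSync(tmp, { force: true }); // clear leftovers from an interrupted run
    fs.linkSync(original, tmp); // throws EXDEV if the files are on different filesystems
    fs.renameSync(tmp, file);
    replacedBytes += b.size;
  }
  return replacedBytes;
}
```

Something like `hardLinkDuplicates("/home/incognito")` would run it over the directory from the post. A second run finds every duplicate already sharing an inode and does nothing, which is what makes a scheduled run safe to repeat.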

I’ve tested it myself and nothing has changed with my nodes: they all still work the same, except that I now have around 200 GB more free space.
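Hard-linked copies share one inode, so one way to check the saving is to compare the apparent size of all files (each path counted separately) with the size counted once per inode. GNU `du` also counts a hard-linked file only once. Below is a hedged TypeScript sketch with a hypothetical `measure` helper. It sums file sizes rather than allocated disk blocks, so its numbers will differ slightly from what `df` reports.

```typescript
import * as fs from "node:fs";
import * as path from "node:path";

// Walk `root` and report two totals:
//  - apparentBytes: every path counted, as if each were a separate copy
//  - uniqueBytes:   each (device, inode) pair counted once, i.e. real data
function measure(root: string): { apparentBytes: number; uniqueBytes: number } {
  const seen = new Set<string>();
  let apparentBytes = 0;
  let uniqueBytes = 0;
  const walk = (dir: string): void => {
    for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
      const full = path.join(dir, entry.name);
      if (entry.isDirectory()) {
        walk(full);
      } else if (entry.isFile()) {
        const st = fs.statSync(full);
        apparentBytes += st.size;
        const key = `${st.dev}:${st.ino}`;
        if (!seen.has(key)) {
          seen.add(key); // first path seen for this inode
          uniqueBytes += st.size;
        }
      }
    }
  };
  walk(root);
  return { apparentBytes, uniqueBytes };
}
```

Calling `measure("/home/incognito")` before and after a cleanup run should show `uniqueBytes` dropping while `apparentBytes` stays the same. Every node still sees all of its files, but the duplicates no longer take separate space.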

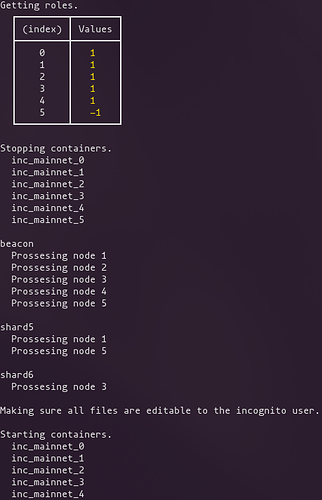

You could create a cron job to run this once a day without worrying about whether your nodes are in committee: the script checks that for you and skips any node that is, to prevent slashing.
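The committee check amounts to splitting the node list before doing any work. This is a minimal sketch. The `NodeStatus` shape and the `planCleanup` name are hypothetical, and it doesn't cover how the real script finds out whether a node is in committee. For the daily run itself, a crontab schedule such as `0 4 * * *` fires once a day at 04:00.

```typescript
// Hypothetical input: the real script determines committee status its own way.
interface NodeStatus {
  name: string;
  inCommittee: boolean;
}

// Split nodes into those that are safe to clean now and those to skip.
// Per the post, nodes in committee are skipped to avoid slashing; a daily
// cron run will pick them up on a later day once they are out of committee.
function planCleanup(nodes: NodeStatus[]): { clean: string[]; skipped: string[] } {
  const clean: string[] = [];
  const skipped: string[] = [];
  for (const node of nodes) {
    (node.inCommittee ? skipped : clean).push(node.name);
  }
  return { clean, skipped };
}
```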

https://github.com/J053Fabi0/Duplicated-files-cleaner-Incognito

Update

You can also use the Deno version, which has some extra features, such as setting your own instructions or copying from a fullnode. I recommend that everyone use Deno instead of Node.

https://github.com/J053Fabi0/Duplicated-files-cleaner-Incognito/tree/deno

If this helped you and you want to say thanks, consider doing so in the memo of a donation:

12sdoBt4XsFUmNiyik3ZiuKMZnA9wv8cTf6QLctNQTeMrCu5HEkSXjPZF7KC2ncfLuGTW9sAUAeU59gVpoyydtWtP2KcYrrCuNRfWJ4q5jsqpaRwLet8TLLvgH6zoBaYr7dDoSH8WVYqpPycLzG3

Important update
